# load packages

library(tidyverse) # for data wrangling and visualization

library(tidymodels) # for modeling

library(openintro) # for the duke_forest dataset

library(scales) # for pretty axis labels

library(knitr) # for pretty tables

library(kableExtra) # also for pretty tables

library(patchwork) # arrange plots

# set default theme and larger font size for ggplot2

ggplot2::theme_set(ggplot2::theme_bw(base_size = 20))

SLR: Conditions + Model evaluation

Sep 20, 2022

Announcements

HW 01: due TODAY at 11:59pm

Lab 03:

due Fri at 11:59pm (Tue labs)

due Sun at 11:59pm (Thu labs)

Looking ahead: Exam 01:

Closed note in-class: Wed, Oct 4

Open note take-home: Wed, Oct 4 - Fri, Oct 6

- Released after Section 002

More about the exam next week

Questions from last class?

Computational set up

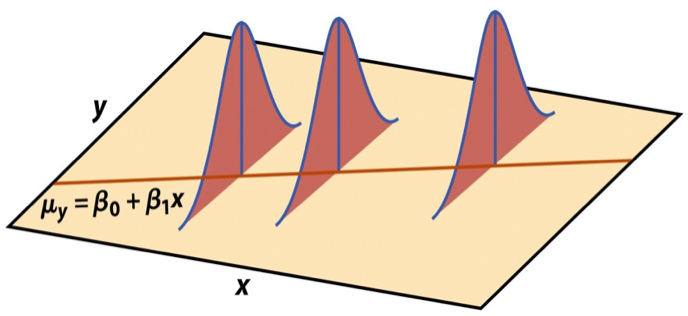

Regression model, revisited

Mathematical representation, visualized

Image source: Introduction to the Practice of Statistics (5th ed)

Model conditions

- Linearity: There is a linear relationship between the outcome and predictor variables

- Constant variance: The variability of the errors is equal for all values of the predictor variable

- Normality: The errors follow a normal distribution

- Independence: The errors are independent from each other

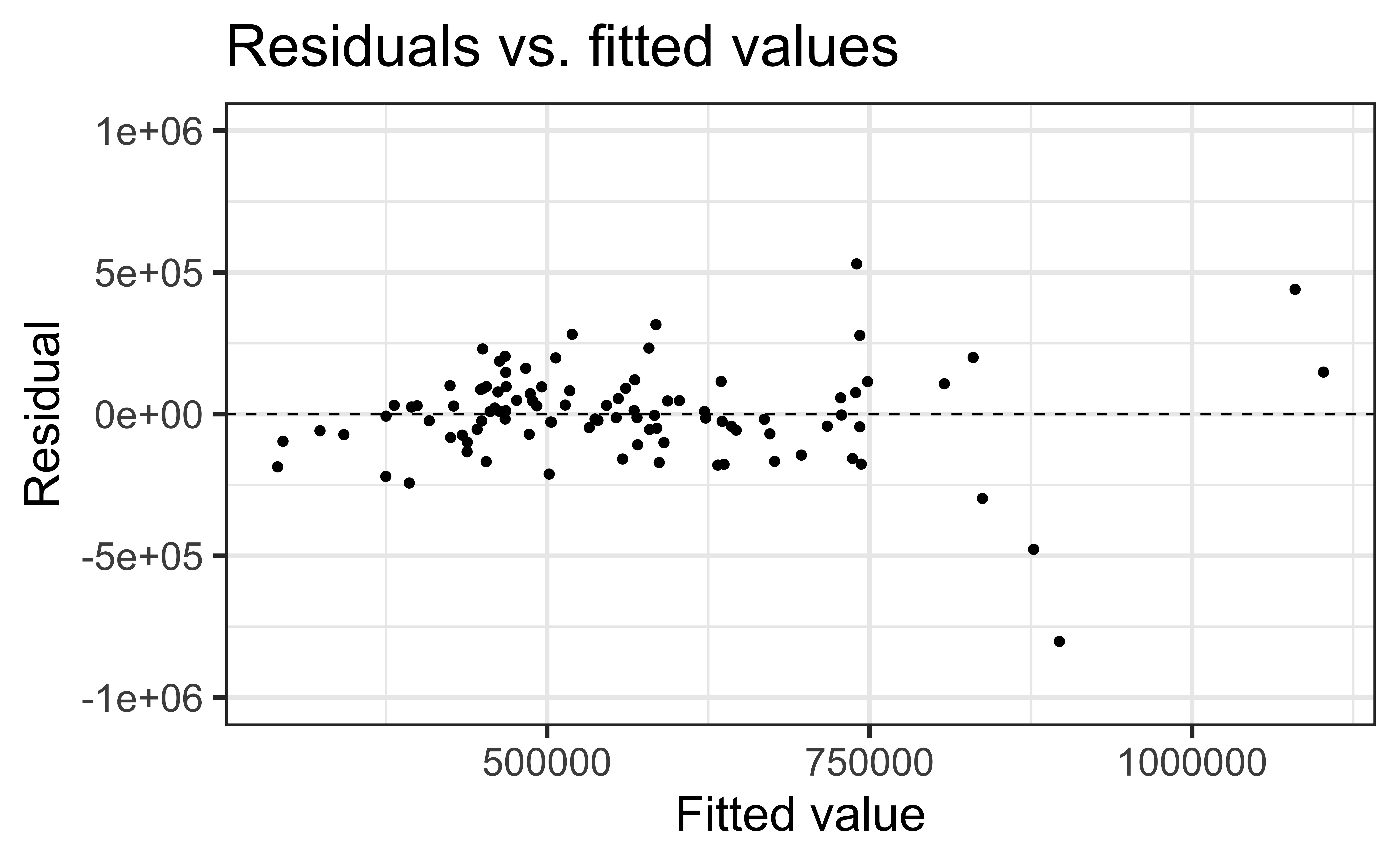

Linearity

If the linear model,

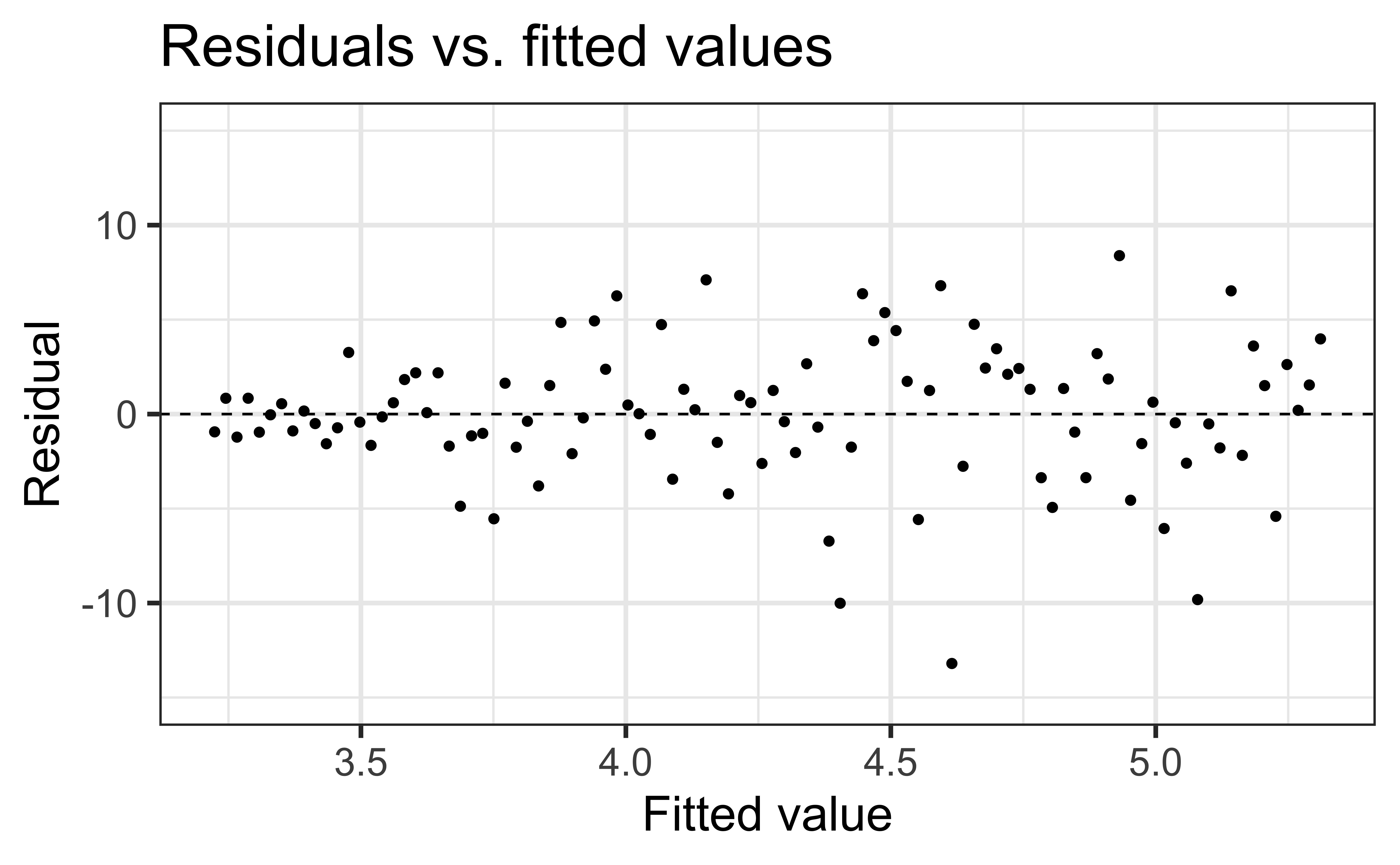

To assess this, we can look at a plot of the residuals vs. the fitted values

Linearity is satisfied if there is no distinguishable pattern in the residuals plot, i.e., the residuals are randomly scattered

A non-random pattern (e.g., a parabola) suggests a linear model does not adequately describe the relationship between the outcome and predictor variables

Linearity

✅ The residuals vs. fitted values plot should show a random scatter of residuals (no distinguishable pattern or structure)

Residuals vs. fitted values (code)
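A minimal sketch of how such a plot could be produced, assuming the model is price regressed on area in the `duke_forest` data. The object names `df_fit`, `df_aug`, and `resid_plot` are illustrative and do not come from the slides.

```r
library(tidyverse)
library(tidymodels)
library(openintro)

# Fit the simple linear regression of price on area (assumed model)
df_fit <- linear_reg() |>
  fit(price ~ area, data = duke_forest)

# augment() on the underlying lm object adds .fitted and .resid columns
df_aug <- augment(df_fit$fit)

# Residuals vs. fitted values, with a dashed reference line at zero
resid_plot <- ggplot(df_aug, aes(x = .fitted, y = .resid)) +
  geom_point() +
  geom_hline(yintercept = 0, linetype = "dashed") +
  labs(x = "Fitted value", y = "Residual")

resid_plot
```

The same plot serves for both the linearity check (look for curvature) and the constant variance check (look for a fan shape).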

Non-linear relationships

Constant variance

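If the spread of the distribution of the outcome is equal for all values of the predictor, then the spread of the residuals should be approximately equal across the fitted values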

To assess this, we can look at a plot of the residuals vs. the fitted values

Constant variance is satisfied if the vertical spread of the residuals is approximately equal as you move from left to right (i.e., there is no “fan” pattern)

A fan pattern suggests the constant variance assumption is not satisfied and that a transformation or some other remedy is required (more on this later in the semester)

Constant variance

✅ The vertical spread of the residuals is relatively constant across the plot

Non-constant variance

Normality

The linear model assumes that the distribution of

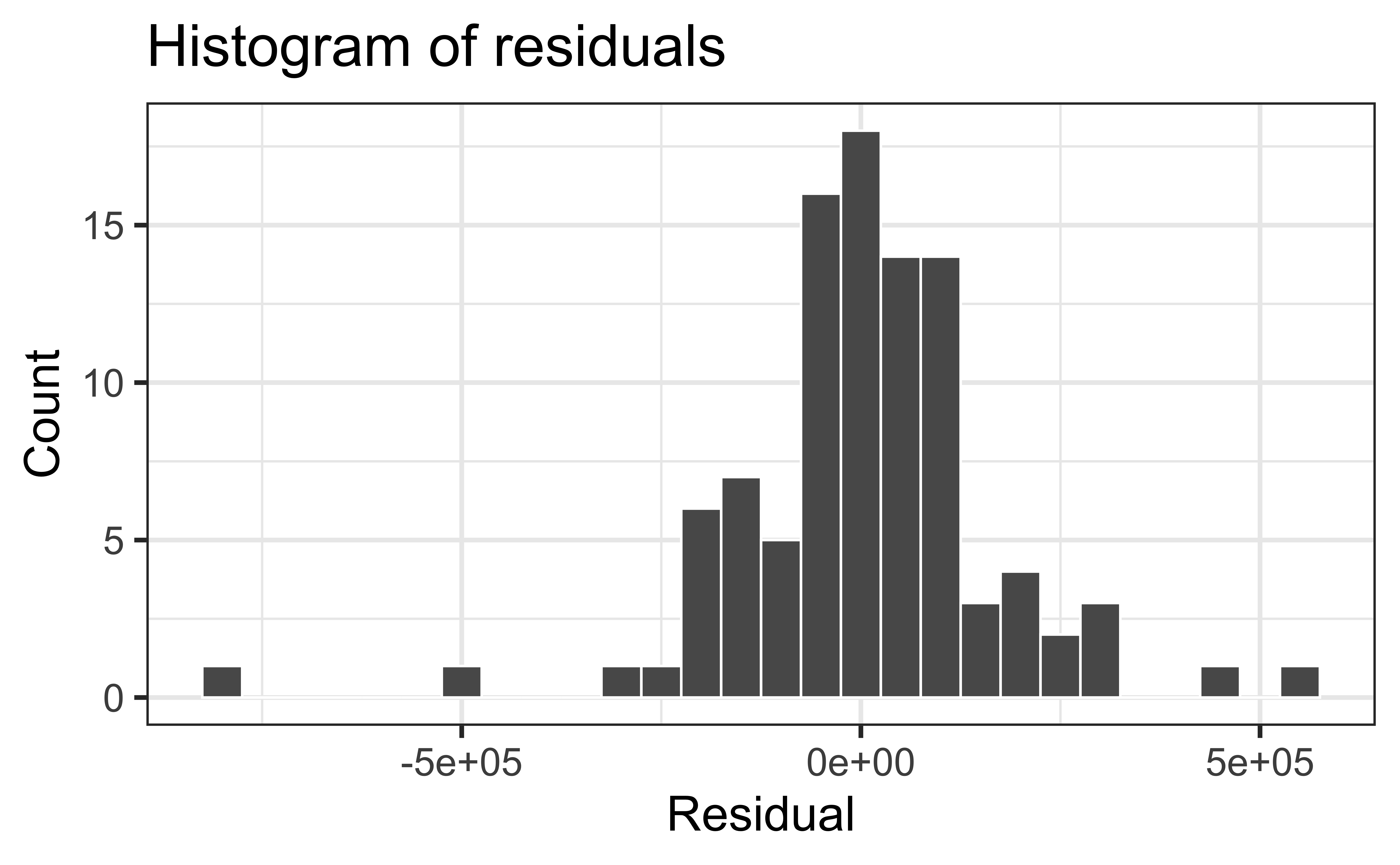

This is impossible to check in practice, so we will look at the overall distribution of the residuals to assess if the normality assumption is satisfied

Normality is satisfied if a histogram of the residuals is approximately normal

- Can also check that the points on a normal QQ-plot fall along a diagonal line
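As a rough sketch of the histogram check, assuming the same price-on-area model for `duke_forest`: the object names and the choice of 20 bins are illustrative, not taken from the slides.

```r
library(tidyverse)
library(tidymodels)
library(openintro)

# Fit the assumed model and extract residuals
df_fit <- linear_reg() |>
  fit(price ~ area, data = duke_forest)
df_aug <- augment(df_fit$fit)

# Histogram of residuals; look for a roughly symmetric, bell-shaped distribution
resid_hist <- ggplot(df_aug, aes(x = .resid)) +
  geom_histogram(bins = 20) +
  labs(x = "Residual", y = "Count")

resid_hist
```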

Most inferential methods for regression are robust to some departures from normality, so we can proceed with inference if the sample size is sufficiently large,

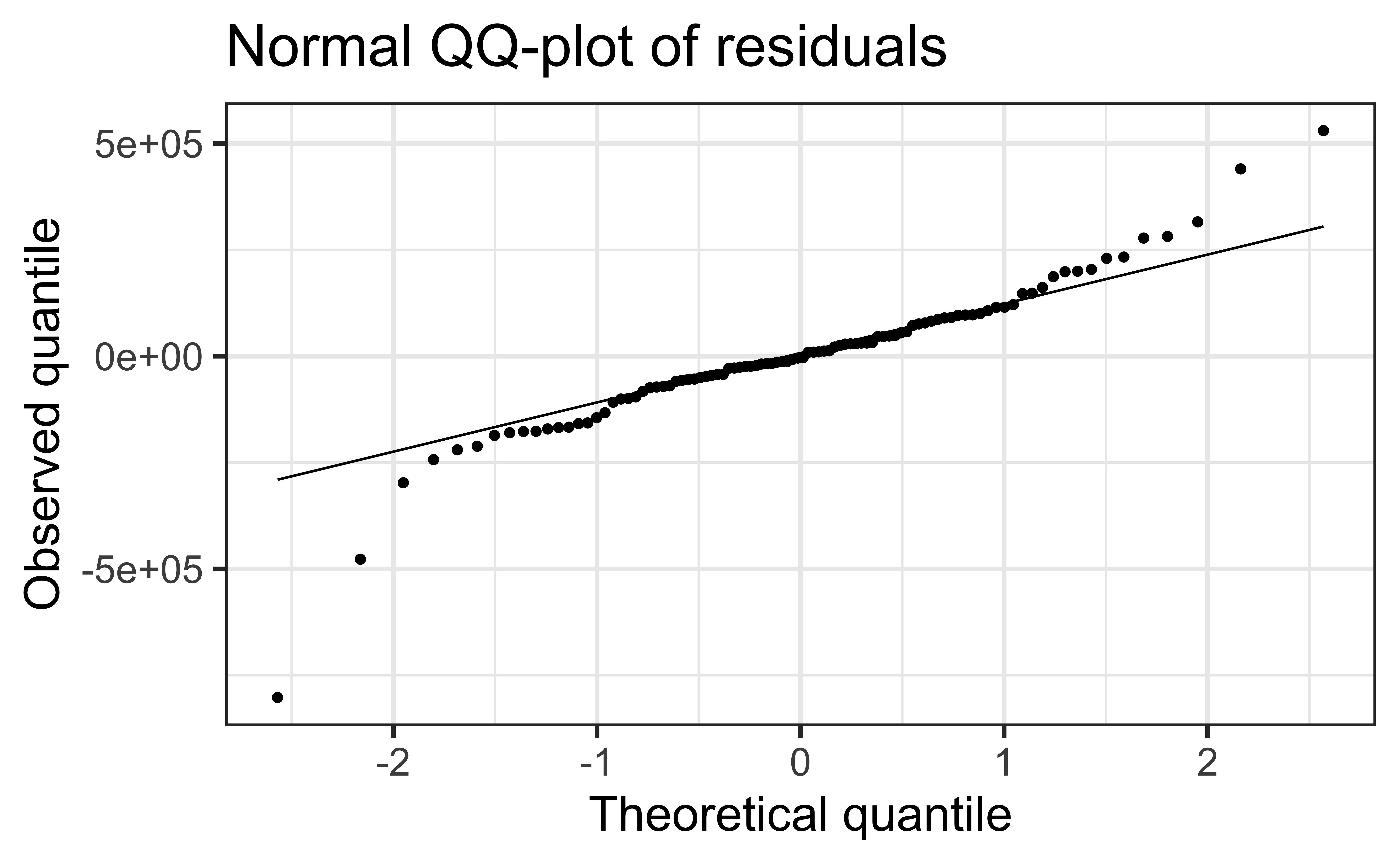

Normality

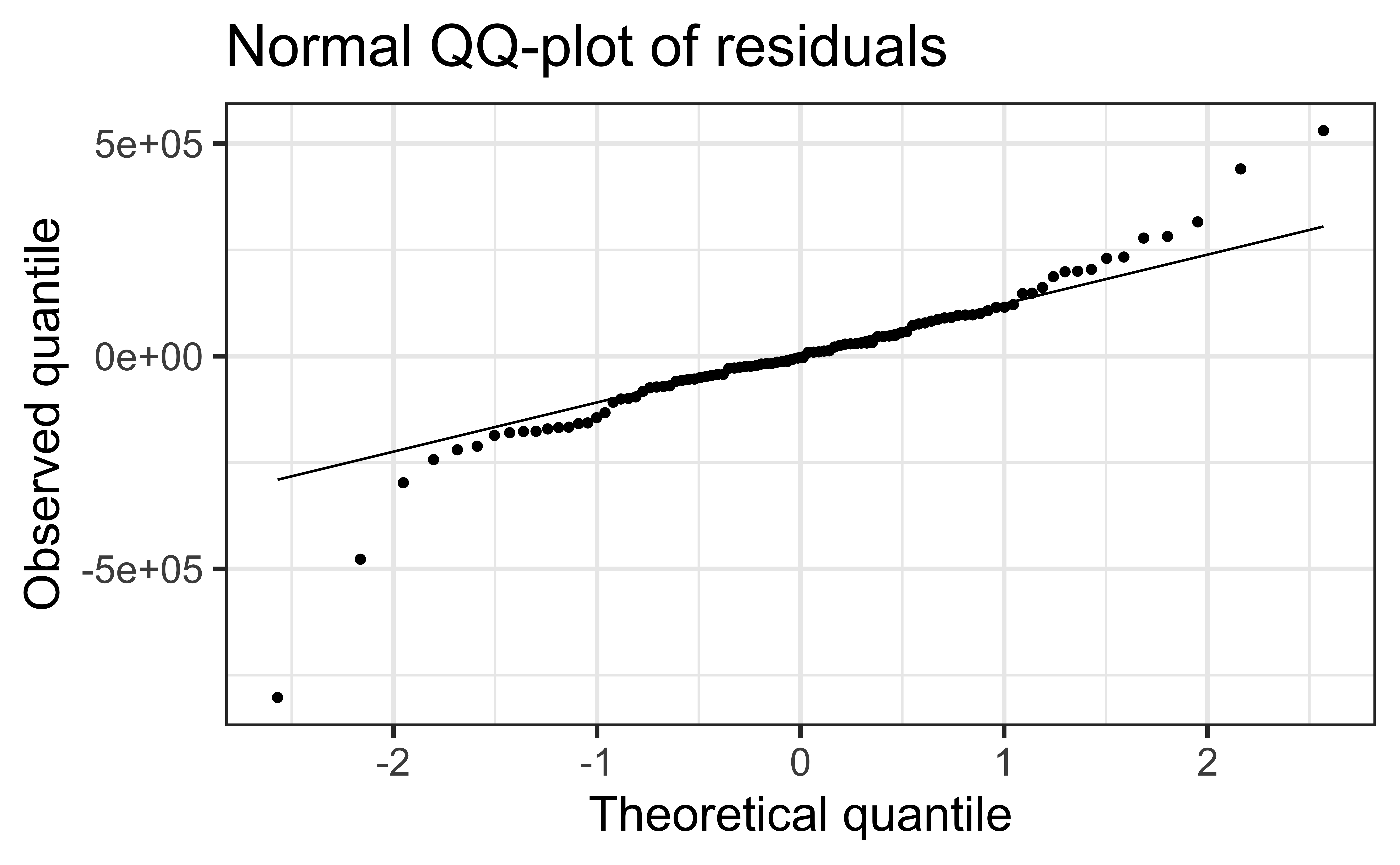

Check normality using a QQ-plot

Code

Assess whether the residuals lie along the diagonal line of the quantile-quantile plot (QQ-plot).

If so, the residuals are approximately normally distributed.
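One way the QQ-plot could be drawn with ggplot2 is sketched here, again assuming the price-on-area model and using illustrative object names.

```r
library(tidyverse)
library(tidymodels)
library(openintro)

# Fit the assumed model and extract residuals
df_fit <- linear_reg() |>
  fit(price ~ area, data = duke_forest)
df_aug <- augment(df_fit$fit)

# Normal QQ-plot: sample quantiles of the residuals against theoretical
# normal quantiles, with a reference line added by stat_qq_line()
resid_qq <- ggplot(df_aug, aes(sample = .resid)) +
  stat_qq() +
  stat_qq_line() +
  labs(x = "Theoretical quantile", y = "Observed quantile")

resid_qq
```

Points that bend away from the line in the tails indicate skewness or heavy tails in the residuals.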

Normality

❌ The residuals do not appear to follow a normal distribution, because the points do not lie on the diagonal line, so normality is not satisfied.

✅ The sample size

Independence

We can often check the independence assumption based on the context of the data and how the observations were collected

Two common violations of the independence assumption:

Serial Effect: If the data were collected over time, plot the residuals in time order to see if there is a pattern (serial correlation)

Cluster Effect: If there are subgroups represented in the data that are not accounted for in the model (e.g., type of house), you can color the points in the residual plots by group to see if the model systematically over- or under-predicts for a particular subgroup
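A sketch of the cluster-effect check, assuming the price-on-area model and using the `type` column of `duke_forest` as the grouping variable. The choice of `type` and the object names are assumptions for illustration.

```r
library(tidyverse)
library(tidymodels)
library(openintro)

# Fit the assumed model
df_fit <- linear_reg() |>
  fit(price ~ area, data = duke_forest)

# augment() with new_data keeps every original column (including type)
# and adds .pred and .resid
df_aug <- augment(df_fit, new_data = duke_forest)

# Residual plot colored by subgroup; a group sitting mostly above or below
# zero suggests systematic under- or over-prediction for that group
resid_by_type <- ggplot(df_aug, aes(x = .pred, y = .resid, color = type)) +
  geom_point() +
  geom_hline(yintercept = 0, linetype = "dashed") +
  labs(x = "Fitted value", y = "Residual", color = "Type of house")

resid_by_type
```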

Independence

Recall the description of the data:

Data on houses that were sold in the Duke Forest neighborhood of Durham, NC around November 2020

Scraped from Zillow

✅ Based on the information we have, we can reasonably treat this as a random sample of Duke Forest houses and assume the error for one house does not tell us anything about the error for another house.

Recap

Used residual plots to check conditions for SLR:

- Linearity

- Constant variance

- Normality

- Independence

Which of these conditions are required for fitting an SLR? Which for simulation-based inference for the slope of an SLR? Which for inference with mathematical models?

Ed Discussion [Section 001][Section 002]


Comparing inferential methods

What are the advantages of using simulation-based inference methods? What are the advantages of using inference methods based on mathematical models?

Under what scenario(s) would you prefer to use simulation-based methods? Under what scenario(s) would you prefer to use methods based on mathematical models?
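To make the comparison concrete, the sketch below computes a 95% confidence interval for the slope both ways, assuming the price-on-area model for `duke_forest`. The seed, the number of bootstrap resamples, and the object names are illustrative choices, not values from the slides.

```r
library(tidyverse)
library(tidymodels)
library(openintro)

set.seed(210)  # arbitrary seed so the bootstrap is reproducible

# Simulation-based: bootstrap distribution of the slope (infer package)
boot_slopes <- duke_forest |>
  specify(price ~ area) |>
  generate(reps = 1000, type = "bootstrap") |>
  calculate(stat = "slope")

boot_ci <- get_confidence_interval(boot_slopes, level = 0.95, type = "percentile")

# Mathematical model: t-based interval from the fitted lm object
df_fit <- linear_reg() |>
  fit(price ~ area, data = duke_forest)

theory_ci <- tidy(df_fit$fit, conf.int = TRUE, conf.level = 0.95) |>
  filter(term == "area")

boot_ci
theory_ci
```

The bootstrap interval relies on fewer model conditions but requires resampling, while the t-based interval is immediate but leans on the normality and constant variance conditions.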


Application exercise

Model evaluation

Two statistics

R-squared, $R^2$: The proportion of variability in the outcome explained by the regression model

Root mean square error, RMSE: A measure of the average error (average difference between observed and predicted values of the outcome)
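For reference, the two statistics are commonly written as follows. The notation is an assumption, since the slides do not define symbols at this point: $y_i$ is the observed outcome, $\hat{y}_i$ the predicted outcome, $\bar{y}$ the mean of the observed outcomes, and $n$ the number of observations.

$$
R^2 = 1 - \frac{\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}{\sum_{i=1}^{n} (y_i - \bar{y})^2},
\qquad
\text{RMSE} = \sqrt{\frac{1}{n} \sum_{i=1}^{n} (y_i - \hat{y}_i)^2}
$$

Both are built from the same residuals $y_i - \hat{y}_i$: $R^2$ scales the squared residuals by the total variability in the outcome, while RMSE keeps them in the units of the outcome.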

What indicates a good model fit? Higher or lower $R^2$? Higher or lower RMSE?

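Interpreting $R^2$

🗳️ Discussion

The $R^2$ of the model for price from area of houses in Duke Forest is 44.5%. Which of the following is the correct interpretation of this value?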

- Area correctly predicts 44.5% of price for houses in Duke Forest.

- 44.5% of the variability in price for houses in Duke Forest can be explained by area.

- 44.5% of the variability in area for houses in Duke Forest can be explained by price.

- 44.5% of the time price for houses in Duke Forest can be predicted by area.

Alternative approach for $R^2$

Alternatively, use glance() to construct a single row summary of the model fit, including $R^2$.
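A minimal sketch of the call, assuming the price-on-area model for `duke_forest`. The object names are illustrative, and `glance()` is applied here to the underlying `lm` object stored in `df_fit$fit`.

```r
library(tidyverse)
library(tidymodels)
library(openintro)

# Fit the assumed model
df_fit <- linear_reg() |>
  fit(price ~ area, data = duke_forest)

# One-row summary of the model fit
fit_summary <- glance(df_fit$fit)
fit_summary

# Pull out R-squared on its own
fit_summary$r.squared
```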

# A tibble: 1 × 12
  r.squared adj.r.squared   sigma statistic  p.value    df logLik   AIC   BIC
      <dbl>         <dbl>   <dbl>     <dbl>    <dbl> <dbl>  <dbl> <dbl> <dbl>
1     0.445         0.439 168798.      77.0 6.29e-14     1 -1318. 2641. 2649.
# ℹ 3 more variables: deviance <dbl>, df.residual <int>, nobs <int>

RMSE

Ranges between 0 (perfect predictor) and infinity (terrible predictor)

Same units as the response variable

Calculate with rmse() using the augmented data

The value of RMSE is not very meaningful on its own, but it’s useful for comparing across models (more on this when we get to regression with multiple predictors)
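A sketch of the RMSE calculation on the augmented data, assuming the price-on-area model for `duke_forest`. The object names are illustrative, and the manual calculation is included only as a cross-check.

```r
library(tidyverse)
library(tidymodels)
library(openintro)

# Fit the assumed model and build the augmented data
df_fit <- linear_reg() |>
  fit(price ~ area, data = duke_forest)
df_aug <- augment(df_fit$fit)

# RMSE via yardstick: observed outcome is price, predicted outcome is .fitted
rmse_tbl <- rmse(df_aug, truth = price, estimate = .fitted)
rmse_tbl

# Cross-check: square root of the mean squared residual
rmse_manual <- sqrt(mean(df_aug$.resid^2))
rmse_manual
```

Because price is recorded in dollars, the RMSE is also in dollars.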

Obtaining $R^2$ and RMSE

Use rsq() and rmse(), respectively

- First argument: data frame containing truth and estimate columns

- Second argument: name of the column containing truth (observed outcome)

- Third argument: name of the column containing estimate (predicted outcome)
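The argument order described above can be sketched as follows, assuming the price-on-area model for `duke_forest` and illustrative object names. The arguments are passed positionally to mirror the list: data frame, then truth, then estimate.

```r
library(tidyverse)
library(tidymodels)
library(openintro)

# Fit the assumed model and build the augmented data
df_fit <- linear_reg() |>
  fit(price ~ area, data = duke_forest)
df_aug <- augment(df_fit$fit)

# data frame, truth column, estimate column
rsq_tbl  <- rsq(df_aug, price, .fitted)
rmse_tbl <- rmse(df_aug, price, .fitted)

rsq_tbl
rmse_tbl
```

Both functions return a one-row tibble with `.metric`, `.estimator`, and `.estimate` columns, so the results can be stacked with `bind_rows()` when comparing models.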

Application exercise